PYTHON SCRIPT FOR AUDITING ROBOTS.TXT

A year ago I wrote about different methods to exploit the robots.txt file; you can find that post here. Sometimes, because of weak directory permissions, you can get into a directory that robots.txt disallows. This Python script, Parsero, checks the HTTP status code of each Disallow entry to determine automatically whether those directories are accessible. For the original article, click here.
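The first half of that idea, turning robots.txt into a list of URLs to probe, can be sketched in a few lines of Python. This is a minimal sketch, not Parsero's actual source. The helper names `normalize_target`, `disallowed_paths` and `disallowed_urls` are hypothetical. It assumes the robots.txt text has already been downloaded, that a target without a scheme (such as `localhost/mutillidae`) means `http://`, and that each Disallow path is joined onto the given base URL, which suits an application installed in a subdirectory.

```python
from typing import List


def normalize_target(target: str) -> str:
    """Prepend http:// when no scheme is given (assumption) and drop a trailing slash."""
    if "://" not in target:
        target = "http://" + target
    return target.rstrip("/")


def disallowed_paths(robots_txt: str) -> List[str]:
    """Collect the unique, non-empty Disallow values from robots.txt text."""
    paths: List[str] = []
    for raw in robots_txt.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and surrounding whitespace
        field, sep, value = line.partition(":")
        if not sep or field.strip().lower() != "disallow":
            continue
        value = value.strip()
        # An empty "Disallow:" means "allow everything", so there is nothing to probe.
        if value and value not in paths:
            paths.append(value)
    return paths


def disallowed_urls(target: str, robots_txt: str) -> List[str]:
    """Join every Disallow path onto the base URL (hypothetical joining rule)."""
    base = normalize_target(target)
    urls: List[str] = []
    for path in disallowed_paths(robots_txt):
        if path.startswith("./"):
            path = path[2:]
        urls.append(base + "/" + path.lstrip("/"))
    return urls


if __name__ == "__main__":
    sample = """User-agent: *
Disallow: /admin/
Disallow: /backup/  # old files
Disallow:
"""
    print(disallowed_urls("localhost/mutillidae", sample))
    # ['http://localhost/mutillidae/admin/', 'http://localhost/mutillidae/backup/']
```

A stricter implementation would resolve absolute paths against the server root (for example with `urllib.parse.urljoin`) instead of the base URL; which rule is right depends on where the audited robots.txt actually lives.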

It requires Python 3 and the urllib3 module.

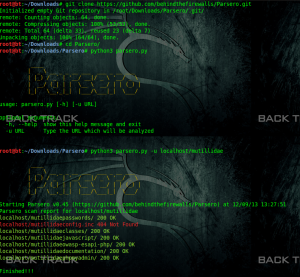

git clone https://github.com/behindthefirewalls/Parsero.git

cd Parsero

python3 parsero.py -h

python3 parsero.py -u localhost/mutillidae

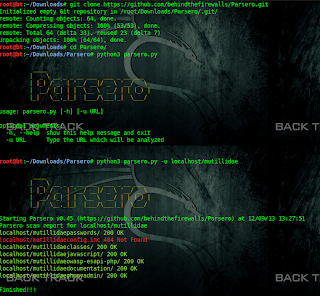

Now you can see which disallowed directories are actually accessible, that is, the ones for which the server returned HTTP status code 200.
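The status check behind that result can be sketched with the standard library's `urllib.request`. This is an illustrative sketch, not Parsero's code (Parsero itself relies on urllib3). The names `http_status`, `audit` and `reachable` are hypothetical, and the `fetch` parameter is injectable so the filtering logic can be tried without a live target.

```python
import urllib.error
import urllib.request
from typing import Callable, Dict, Iterable, List


def http_status(url: str, timeout: float = 5.0) -> int:
    """Return the HTTP status code of a GET request to url."""
    request = urllib.request.Request(url, method="GET")
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        # urlopen raises 4xx/5xx responses as HTTPError; the status code is still what we want.
        return err.code


def audit(urls: Iterable[str], fetch: Callable[[str], int] = http_status) -> Dict[str, int]:
    """Map each disallowed URL to the status code returned by fetch."""
    return {url: fetch(url) for url in urls}


def reachable(results: Dict[str, int]) -> List[str]:
    """Keep only the URLs that answered 200 OK, i.e. disallowed but accessible."""
    return [url for url, status in results.items() if status == 200]


# Example against a live target (hypothetical URL):
# print(reachable(audit(["http://localhost/mutillidae/passwords/"])))
```

Two caveats about this design: `urlopen` follows redirects, so a disallowed path that redirects to, say, a login page may also report 200; and connection failures (`urllib.error.URLError`) are left to propagate rather than being counted as a status code.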